Immersive Gaming: The Challenge

Presented at Sydney Games Forum, University of Technology Sydney.

Written by Paul Bourke, May 2009

For many game genres it is desirable, for the enjoyment and engagement of the game but also for game performance, to attempt to immerse the player in the virtual environment. That is, to facilitate a suspension of disbelief such that the player experiences a heightened sense of being in the game's virtual world. This sense of immersion obviously depends upon a number of factors, not the least of which are the subject matter, goals, and interactions experienced in the game play. Recently, with the rapid rise in graphics performance available on the desktop, there has been significant effort to increase the fidelity of the visuals. Immersion is also dependent on how the game is interfaced to the human player, and it is the way the human senses may be stimulated that will be discussed here. It is through the stimulation of our senses that we experience our real world, so it is reasonable to assume our sense of immersion in a virtual environment will be heightened if the same senses are engaged.

As humans the key way we experience the world around us is with our visual system; this is followed by our other senses of hearing, touch, smell, and taste. It is therefore not unexpected that the main way we experience a 3D gaming world is through our visual system, but what about our other senses? While taste would seem to have limited application to gaming, one might imagine being able to sample products at a food stall in a virtual village, or eat fruit off a tree. The difficulty is that the sense of taste requires the feel of the food in the mouth along with the release of chemicals and even the sounds of the food being chewed. Unfortunately the most likely means of achieving a sense of taste will require direct brain stimulation and there are no clear technologies yet available.
Similarly the sense of smell could significantly enhance the gaming experience [Barfield, 1995]: to be able to smell the dragon's breath, sniff a flower, or smell smoke from the troll's fireplace before it was even visible. Unfortunately the technologies to realise these dreams are yet to be developed in an affordable form. They have been used in therapeutic virtual reality and the fidelity is reasonably high, but the technology is extremely involved. Perhaps the most interesting technology is an odour cannon [Yanagida, 2004] that, while currently limited to a small range of distinctive odours, can even target individuals with a specific smell.

The sense of touch likewise would enhance many online experiences [Klein, 2005]. There is a plethora of technologies for providing feedback to our sense of touch. At the moment we are limited to rather crude vibrational effects within controllers and force feedback input devices. This is still a long way from feeling the sea breeze on one's face when standing on the beach, or feeling the roughness of a horse's hair, the heat of the oven, or the prick of a spear point.

When we reach our sense of hearing, finally some good news. The technology exists to provide the player with sophisticated spatial audio and a high fidelity 3D acoustic environment. While these are not always employed, it is largely a matter of budget and a decision made by the player, who may decide that a simple stereo system provides a rich enough environment. While a higher fidelity system may not improve their game play performance it may certainly result in an improved game experience; we enjoy, if only subconsciously, ambient sounds such as the wind in the trees and the chirping of birds.

This brings us back to our visual system, our eyes. Surprisingly, while the technology exists to engage the unique characteristics of our visual system, in general it isn't being exploited. There are three characteristics of our visual system that are often neglected.

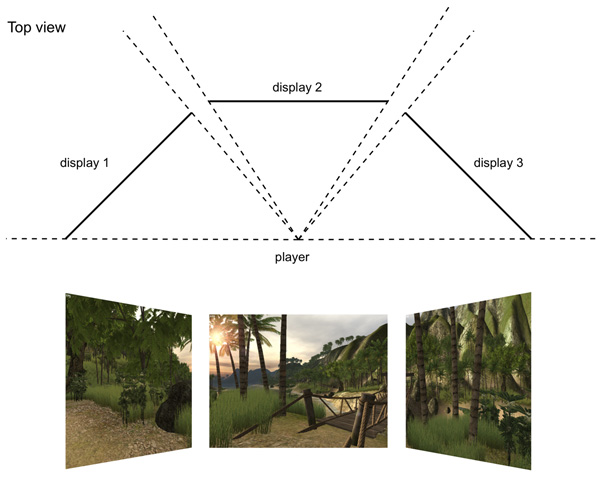

Acuity and dynamic range. Personal computer displays exist which come close to the visual acuity capabilities of the human visual system. Unfortunately in many cases games are not using the full resolution of the display. This is generally for performance reasons: a display at HD or greater resolution is still a strain on graphics performance, which is related of course to the attempts at higher fidelity rendering that push the latest graphics cards to their limit. It is expected that in time visual quality, and the image resolution at which that quality can be maintained, will continue to rise as the graphics hardware continues to improve. Of more interest is the extremely limited dynamic range of modern displays. Indeed the move away from CRT based displays has led to a reduction of the dynamic range. The bottom line is that 8 bits per r,g,b per pixel is woefully inadequate to represent the range of colours and intensities we experience in everyday life. While high dynamic range displays certainly have been developed, there are no commodity based models in the near future and they require an entirely different colour space API.

Stereopsis. The 3D depth perception we experience in everyday life is due to each eye getting a slightly different view of the world, a view from horizontally offset eyes. When engaging with 3D graphics on a normal computer display, each eye is getting the same view of the 3D world; the 3D information therefore appears flat and aligned with the screen surface. There are a number of technologies that can present a 3D world independently to each eye from two horizontally offset positions (two cameras).
They include the older frame sequential stereo based upon CRT monitors or projectors; the more common polarisation based projection systems employing modern digital projection; the zero ghosting technology called Infitec that, like polarisation based systems, employs two data projectors; the newer but expensive digital projectors that can now replicate the older frame sequential stereo techniques; some recent LCD based monitors that exploit the polarisation of LCD crystals; and other new technologies that can offer a glasses free stereoscopic experience, although at the price of a loss of resolution. There is no shortage of hardware options and indeed many games have support for stereoscopic operation out of the box, generally with wired shutter glasses and frame sequential stereo. There are additionally driver level solutions that allow standard games (those without explicit stereoscopic support) to present a 3D stereoscopic experience.

I will argue that very few gamers use these stereoscopic options after the initial novelty has worn off. Very fundamental principles mean that every stereoscopic viewing system introduces a level of eye strain [Miyashita, 1990]. There are obvious contributors to eye strain such as ghosting (the degree to which the image for one eye leaks into the other eye). It should be pointed out that commodity based glasses and monitors tend to be at the lower end of the quality scale and thus have higher ghosting levels than "professional" glasses. However even in a perfect zero ghosting system such as Infitec there is a conflict in the accommodation depth cues that will always exist: the muscle tension for focus is always at the screen depth rather than the object depth [Yamazaki, 1990].
It has been well known for some time in the professional simulation, training and visualisation industries that use stereoscopic 3D displays that if a human is to use the technology for long periods (say more than 1 hour) then very careful consideration has to be given to the quality of the display technologies. One hour in a gaming experience is not very long at all, and the commodity based stereoscopic systems are of very poor quality compared to the more professional systems. The other reason why few gamers find the need to regularly use stereoscopic options is that there simply isn't any significant gaming advantage, and yet there are real disadvantages such as eye strain from prolonged use. The estimation of depth in an action game, for example, is largely irrelevant: it matters not whether a missile launched from an opponent is 10m or 20m away, one simply needs to get out of the way. Couple this with the fact that the stereoscopic settings employed by most games do not give correct depth perception but only relative depth perception, and any gaming advantage is further diminished.

Peripheral vision. Besides the depth perception we enjoy from having two eyes, our visual system has another important characteristic that, like stereopsis, is not employed when looking at a 3D world on a single flat computer screen. Namely we have a very wide horizontal field of view (almost 180 degrees) and a moderately wide vertical field of view (around 120 degrees). In relation to gaming it will be argued that, unlike stereopsis, peripheral vision could provide the player with a significant gaming advantage [Hillis, 1999]. Our peripheral vision is very sensitive to motion and thus is attuned to detecting potentially dangerous activity in our far field of view. Indeed peripheral vision in most animals has evolved for exactly this purpose; in the case of humans, for detecting lions sneaking up on our ancestors on the savannah of Africa, who may have been considered by the lion as a hunting target.
It is therefore easy to believe that engaging the player's peripheral vision for competitive games within a 3D world would lead to a gaming advantage. The reasons are similar to those used to justify the use of surround graphical environments for simulation and other performance and training related virtual reality applications. Strange then that while games often offer stereoscopic support, they as a whole have very poor (if any) support for the player's peripheral vision. This is despite the relative simplicity with which some displays that engage our peripheral vision can be built, and the fact that graphics hardware is now both readily available and capable of handling what is often required for such displays, namely multiple and additional stages in the graphics pipeline. It should be noted also that while stereoscopic support in most games is such that extended playing is problematic, there are no eye strain issues with wide peripheral vision based displays.

There have been some attempts at engaging peripheral vision in games where the additional displays are intended to allow the player to look sideways, for example, to see out the side windows in a car simulator. While that is entirely valid, the discussion of wide field of view imagery here also considers the case where the player is generally only looking forward; as in real life, the far field imagery is still valuable. Display technologies that engage our peripheral vision can be classified into a number of categories, each of which places its own requirements on the capabilities the developer needs to add to the game. It may be noted that I do not include head mounted displays. The omission is intentional since, while they can provide a stereoscopic effect, they have on the whole a very narrow field of view and indeed usually give a heightened sense of looking through a rectangular tube.

Multiple flat separate displays.
These are the simplest environments to support since they require nothing more than multiple perspective projections. In terms of hardware they need only as many graphics pipes as there are displays, or a screen splitting product such as the Matrox dual and triple head2go converters. In order to maximise horizontal peripheral vision a common configuration is a central display and two side displays, with the side displays angled to give information in the peripheral region. While generally intended for applications requiring a large pixel real estate, there are products [DigitalTigers] that provide single monitor units made up of multiple panels and these have been adopted by some gamers. It should be noted that in order for a game to support these displays it is not sufficient to provide a single extremely wide angle perspective projection; control over the individual view frustums for each display is required.
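The per-display frustum control described above can be sketched as follows. This is a minimal illustration rather than anything given in the original text: it assumes each display is a flat rectangle described by three of its corners in the same coordinate system as the viewer's eye, and it returns the left, right, bottom and top extents at the near plane, in the style of the arguments to an OpenGL `glFrustum` call. The function names, the display layout and the near plane distance are all hypothetical.

```python
import math

def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def _unit(a):
    n = math.sqrt(_dot(a, a))
    return tuple(x / n for x in a)

def off_axis_frustum(lower_left, lower_right, upper_left, eye, near):
    """Return (left, right, bottom, top) at the near plane for one flat display.

    Hypothetical helper: the three corners and the eye share one coordinate
    system, and the display is assumed to be planar and rectangular.
    """
    right_axis = _unit(_sub(lower_right, lower_left))
    up_axis = _unit(_sub(upper_left, lower_left))
    normal = _unit(_cross(right_axis, up_axis))  # points from the screen towards the eye
    to_ll = _sub(lower_left, eye)
    to_lr = _sub(lower_right, eye)
    to_ul = _sub(upper_left, eye)
    distance = -_dot(to_ll, normal)  # perpendicular distance from eye to screen plane
    if distance <= 0:
        raise ValueError("eye must be in front of the display")
    scale = near / distance
    return (_dot(right_axis, to_ll) * scale,
            _dot(right_axis, to_lr) * scale,
            _dot(up_axis, to_ll) * scale,
            _dot(up_axis, to_ul) * scale)

# Assumed layout: a 2 x 1 unit centre display one unit in front of the eye,
# and a right-hand display hinged at its edge and angled 45 degrees inwards.
EYE = (0.0, 0.0, 0.0)
CENTRE = ((-1.0, -0.5, -1.0), (1.0, -0.5, -1.0), (-1.0, 0.5, -1.0))
_c = math.cos(math.radians(45.0))
RIGHT = ((1.0, -0.5, -1.0), (1.0 + 2.0 * _c, -0.5, -1.0 + 2.0 * _c), (1.0, 0.5, -1.0))

centre_frustum = off_axis_frustum(*CENTRE, EYE, 0.1)  # symmetric about the view axis
right_frustum = off_axis_frustum(*RIGHT, EYE, 0.1)    # asymmetric: left extent is zero
```

In this layout the centre display gets a symmetric frustum while the angled side display gets an asymmetric one, which is why a single wide perspective projection cannot serve all three panels. A complete implementation would also rotate each camera to look along its display's normal; only the frustum extents are shown here.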

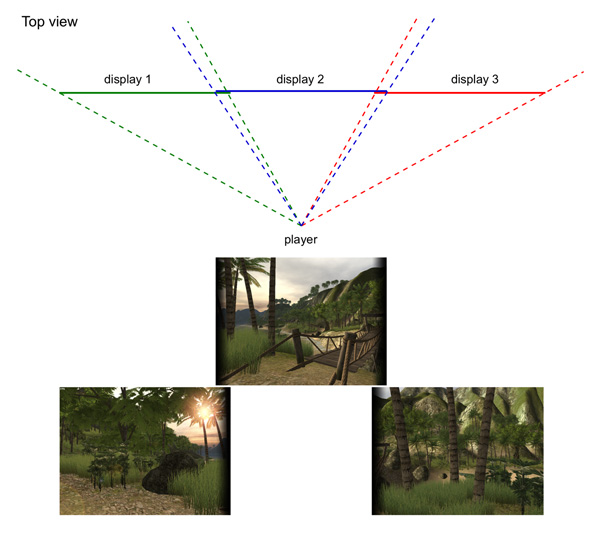

Multiple flat seamless displays. The above is generally suited to multiple discrete displays, such as LCD monitors. A seamless display consisting of multiple projected images requires edge blending, that is, adjacent images overlap and are blended into each other. Edge blending, while it exists in some high end projection hardware (Barco and Christie for example), tends to be prohibitively expensive. The graphics card based edge blending offered by some drivers is in general of very poor quality compared to what can be quite simply achieved in software [Bourke, 2004]. In addition to the edge blending controls, the view frustums need to overlap. All these reasons suggest that edge blended displays are best supported explicitly within the application software.
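As an illustration of how simple software edge blending can be, the sketch below computes a blend weight across the overlap region between two projected images. The particular curve, its exponent `p` and the display `gamma` are assumptions chosen for the example and are not values given in the text; the only property relied upon is that the two overlapping weights sum to one in linear light.

```python
def blend_weight(x, p=2.0):
    """Linear-light weight at position x in [0, 1] across the overlap.

    x = 0 is the outer edge of this projector's image (weight 0) and
    x = 1 is where the overlap ends (weight 1). The exponent p is an
    assumed shape parameter: p = 1 gives a straight ramp.
    """
    x = min(max(x, 0.0), 1.0)
    if x < 0.5:
        return 0.5 * (2.0 * x) ** p
    return 1.0 - 0.5 * (2.0 * (1.0 - x)) ** p

def blend_mask(x, p=2.0, gamma=2.2):
    """Value to multiply pixel colours by, compensating for display gamma.

    The gamma of 2.2 is an assumption; a real installation would
    measure it per projector.
    """
    return blend_weight(x, p) ** (1.0 / gamma)

# Masks for the two projectors at the same physical point in the overlap:
# the second projector sees the point at 1 - x.
position = 0.25
mask_a = blend_mask(position)
mask_b = blend_mask(1.0 - position)
```

After the projector applies its gamma, `mask_a` and `mask_b` contribute intensities that add up to the full brightness, so the overlap band should not appear brighter or darker than the rest of the image.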

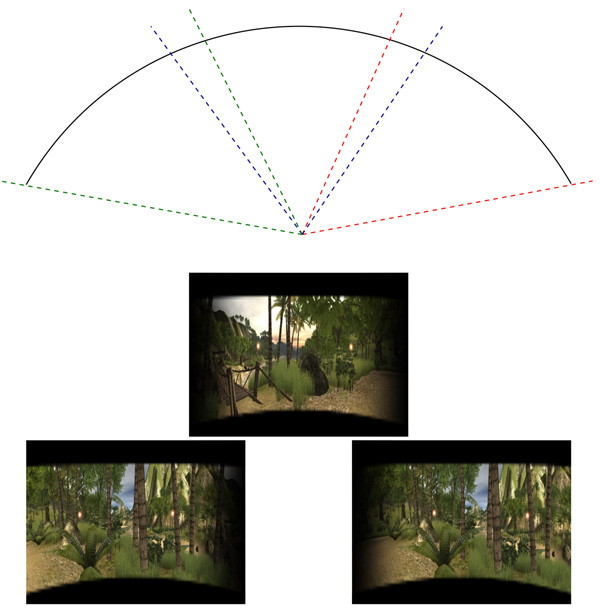

Cylindrical displays. A cylindrical display that wraps around the gamer also requires edge blending [Bourke, 2004b], at least for a seamless display, but it also requires a geometry correction to map the perspective projections (arising from the use of data projectors) onto the cylindrical geometry. Cylindrical displays have been built with angles ranging from as little as 90 degrees to a full 360 degrees; the most common is around 120 degrees. While the various multiple flat panel configurations already mentioned and cylindrical displays can fulfil our desire for horizontal field of view, they generally fall short for our vertical field of view. They still provide the player with views of their real world rather than engaging their visual sense with entirely virtual stimuli.
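The relationship between a perspective image and a cylindrical surface can be sketched with a little trigonometry. The example below is a simplified illustration under stated assumptions: the viewer sits on the axis of a unit-radius cylinder, and the function (a hypothetical name) reports where a point on the cylinder falls in a perspective image centred on the view axis. A real installation with offset projectors would rely on a calibrated warp mesh rather than this closed form.

```python
import math

def cylinder_to_perspective(theta, h, hfov, vfov):
    """Map a point on a unit-radius cylinder to perspective image coordinates.

    theta is the angle (radians) around the cylinder from the view axis,
    h is the height on the cylinder, and hfov / vfov are the horizontal and
    vertical fields of view (radians) of the perspective image.
    Returns (x, y) normalised so that the image spans [-1, 1] on each axis.
    """
    if abs(theta) >= math.pi / 2:
        raise ValueError("point cannot be seen by a single perspective view")
    x = math.tan(theta) / math.tan(hfov / 2)
    y = (h / math.cos(theta)) / math.tan(vfov / 2)
    return x, y

# Assumed example fields of view for one perspective render.
HFOV = math.radians(90.0)
VFOV = math.radians(60.0)
y_centre = cylinder_to_perspective(0.0, 0.3, HFOV, VFOV)[1]
y_side = cylinder_to_perspective(0.6, 0.3, HFOV, VFOV)[1]
```

A line of constant height on the cylinder sits further from the image centre at the sides than in the middle (`y_side` is larger than `y_centre`), which is the curvature the geometry correction has to account for when the texture coordinates of the warp mesh are generated.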

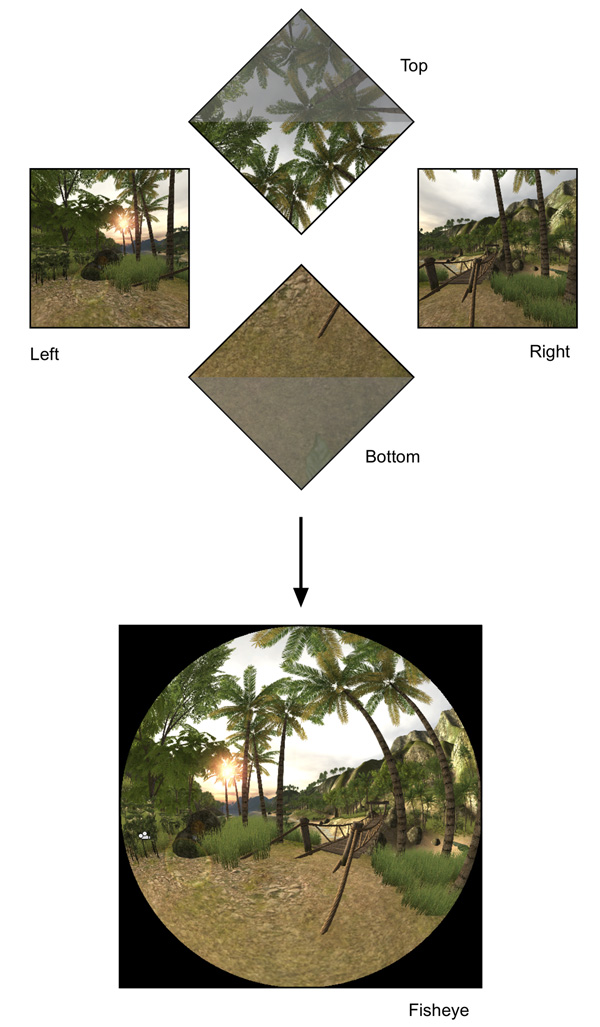

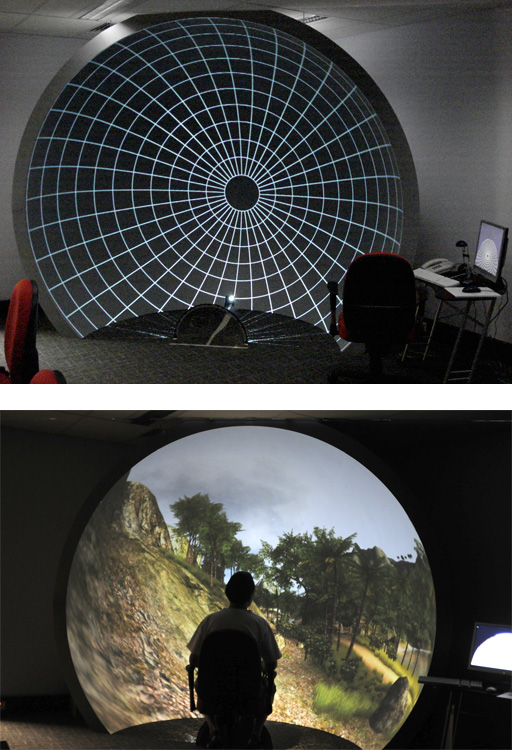

Hemispherical displays. These are perhaps the ultimate since they can fill our field of view both horizontally and vertically [Bourke, 2008]. They are most evident perhaps in planetariums which, while they generally concentrate on astronomy, do provide an immersive experience of the night sky. The planetarium visitor (at least in the traditional use of the planetarium) sees only the virtual sky; the dome surface is invisible and the visitor's visual system is entirely filled with the virtual. It is this experience that is seeing planetariums worldwide upgrade to digital projection, which allows them to provide immersive experiences based upon subject matter outside their usual astronomy fare.

The natural projection for a hemispherical environment is no longer a perspective projection; such a projection can only cope with relatively modest fields of view of around 100 degrees before quality is compromised. This hasn't stopped some gamers from simply living with the distortion; examples are the "j-Dome" [jdome] and the "Z-Dome" [GDC, 2008]. These simply wrap a perspective projection across the dome surface, so while they engage one's peripheral vision they provide no additional visual information; indeed they present incorrect visual information in the periphery. It is however a testament to the importance of our peripheral vision that even these crude products provide a more engaging experience. Alternatively, multiple perspective views can be rendered and then used to drive a multiple projector arrangement [Kang, 2006], or a fisheye projection can be created from them for a single projector and fisheye lens configuration [Elumenati]. There is now an additional approach, again using a single projector, but instead of a fisheye lens a spherical mirror is used to spread light across the dome [Bourke, 2005]. This approach is a fraction of the cost of a projector with a fisheye lens for the same image quality. This technology requires an additional rendering stage after the fisheye is created in order to create a geometry corrected image that undoes the distortion incurred by the spherical mirror.

Case study: iDome

The key design goal of the iDome is to engage the entire visual field of view in a seamless fashion and provide an accurate, geometrically correct image across the whole hemispherical surface. The iDome has been designed with some secondary goals. In order to reduce costs it employs a single data projector. It attempts to achieve a high quality result by using a high definition projector and a high quality, artefact free hemispherical dome surface. Indeed it is this seamless surface that contributes significantly to the immersive nature since the surface onto which the 3D world is projected becomes invisible [Bailey, 2006]. If the human visual system no longer sees the graphics as residing on a surface then other depth cues such as shading and motion enable the visual system to derive apparent depth information. The consequence is that players in the iDome often ask why they perceive depth without the necessity of wearing glasses; this is also a common experience patrons report in digital planetariums.

The dimensions of the iDome, 3m diameter with ? of the lower hemisphere truncated, have been chosen as the largest that still allows the structure to reside within a standard stud room, this further extends the availability as a platform for research into immersion and gaming.

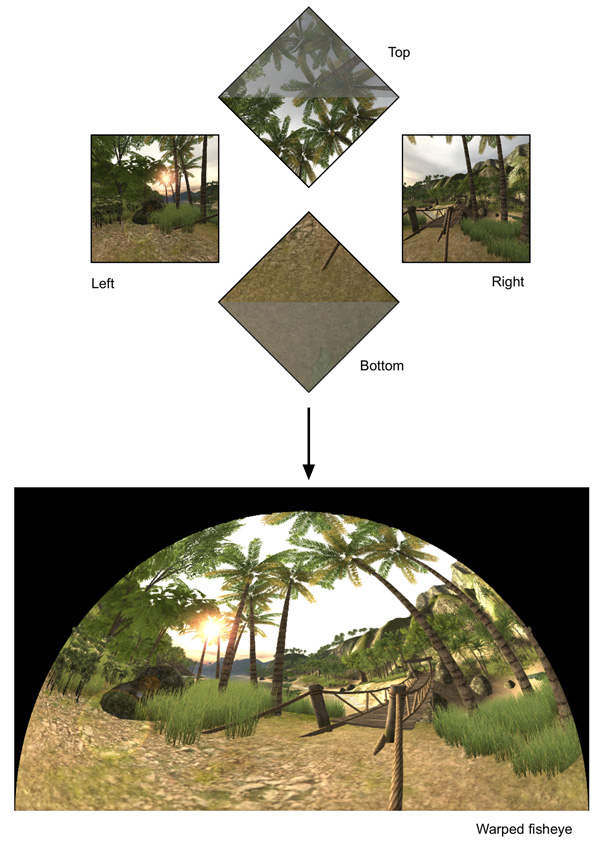

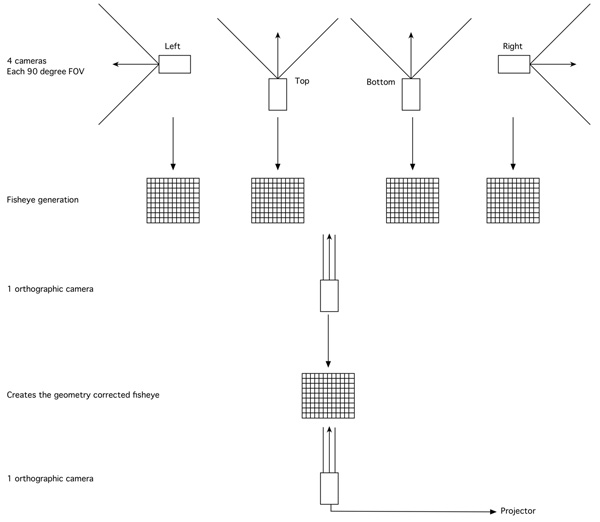

Generating graphics for the iDome by a game or any 3D package is one of the more demanding examples of the type of frustum/camera control discussed earlier. It requires typically 4 render passes, capturing views with frustums that pass through the corners of a cube. These four renders are saved as textures at which point they can be processed in one of two ways

While (2) may seem to be preferable to (1) it turns out that the extra render pass in (1) incurs an insignificant performance penalty with modern graphics cards. Generating the fisheye in (1) can lead to some simplifications in the support for variable hardware configurations, each of which requires a different fisheye warping function.
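The step of building a fisheye image from the cube-style renders can be sketched as a per-pixel lookup. This is an illustration under several assumptions that are not stated in the text: an angular (equidistant) fisheye with a 180 degree aperture, a camera looking along the negative z axis, and a cube turned 45 degrees about the vertical axis so that the forward hemisphere is covered by the left, right, top and bottom faces. The function names and the face coordinate conventions are hypothetical.

```python
import math

def fisheye_to_direction(u, v, aperture=math.pi):
    """Unit view direction for a fisheye image position, or None outside the circle.

    u and v run over [-1, 1] across the fisheye circle; x is right, y is up
    and the camera looks along -z. An angular fisheye is assumed, so the
    angle from the view axis grows linearly with the radius.
    """
    r = math.hypot(u, v)
    if r > 1.0:
        return None
    phi = math.atan2(v, u)
    theta = r * aperture / 2.0
    s = math.sin(theta)
    return (s * math.cos(phi), s * math.sin(phi), -math.cos(theta))

def cube_face_lookup(direction):
    """Return (face, s, t) with s, t in [-1, 1] for a forward-facing direction.

    The cube is assumed to be rotated 45 degrees about the y axis, so the
    view axis points at the vertical edge shared by the left and right faces.
    """
    c = math.sqrt(0.5)
    x, y, z = direction
    a = c * (x - z)    # component along the right face normal
    b = c * (-x - z)   # component along the left face normal
    if a >= abs(y) and a >= b:
        return "right", -b / a, y / a
    if b >= abs(y) and b >= a:
        return "left", a / b, y / b
    if y > 0:
        return "top", a / y, b / y
    return "bottom", a / -y, b / -y

# The centre of the right-hand half of the fisheye lands in the middle of the right face.
example = cube_face_lookup(fisheye_to_direction(0.5, 0.0))
```

In a game engine this lookup would normally be baked into the texture coordinates of a mesh once, rather than evaluated per pixel every frame, which is why the approach fits the render to texture model.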

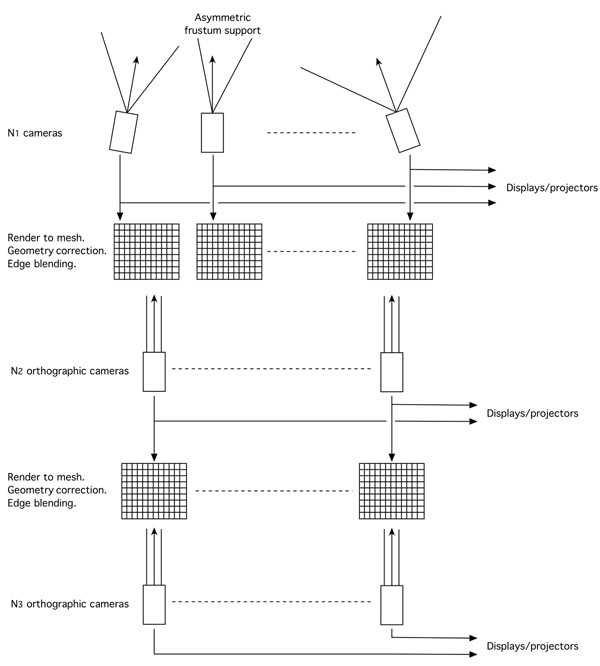

It should be pointed out that there are other methods of computing the required fisheye images using vertex shaders. These are not discussed here since they have some problematic aspects [Bourke, 2009] and they would seem hard to generalise.

Implications for game developers

The key requirement is external control over the frustums being rendered and the optional warping mesh onto which those rendered frustums are applied. Some gaming engines such as Unity and Quest3D support this through their comprehensive programming APIs, but end product games could still support a wide range of immersive displays with a relatively simple model and end user interface. It should additionally be noted that providing the control over frustums as outlined here automatically provides support for many existing stereoscopic environments, notably the projected class of stereoscopic display based upon dual projectors. The exact model and interface that a game wishing to support these immersive displays should implement is not given here, but the general requirements and pipeline shall be described. These are the same capabilities that are commonplace in virtual reality applications, simulators, and high end environments such as the CAVE [Cruz-Neira, 1993].

For practical purposes the discussion will be limited here to single computer based applications; the support required for genlocking between multiple computer displays is uncommon in the gaming industry and generally requires specialist graphics hardware. In addition it is argued that the increasing performance of graphics hardware will make these multiple computer based systems increasingly less necessary. The model presented assumes a number of virtual cameras; these are the inputs to the subsequent pipeline. The outputs consist of a number of displays. Most games today assume only one camera and one display, making them totally inadequate for the environments discussed here.
There is no technical limit of a single display output; most graphics cards today have two output ports and this can be extended with multiple graphics cards. The number of outputs can also be extended with devices such as the Matrox [Matrox] dual and triple head to go boxes (among others). Between the input and output there are two layers of render to texture with the textures applied to meshes, see figure 7. It is these meshes that can perform the geometry correction needed for curved surfaces and the edge blending needed for tiled displays. The use of texture meshes is important from the point of view of generalisation: the game need not be aware of what the meshes do. It is envisaged that the mesh files will be supplied independently of the game developer by the supplier of the display or projection hardware. This is critical in the case of the many presentation hardware options that require an on-site calibration process.
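One possible way to express the camera, mesh and display model described above is as a small configuration structure. The sketch below is purely illustrative: the class names, field names and mesh file names are hypothetical, and the text deliberately does not prescribe an exact interface. It only shows that cameras, up to two mesh stages and displays can be described as data that the game loads without knowing what each mesh does.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

@dataclass
class Camera:
    name: str
    frustum: Tuple[float, float, float, float]  # left, right, bottom, top at the near plane
    texture_size: Tuple[int, int]               # resolution of the render texture

@dataclass
class MeshStage:
    name: str
    mesh_file: str     # supplied by the display vendor; opaque to the game
    inputs: List[str]  # cameras or earlier stages whose textures are applied to the mesh

@dataclass
class Display:
    name: str
    source: str        # camera or stage presented on this output

@dataclass
class Pipeline:
    cameras: List[Camera]
    stages: List[MeshStage] = field(default_factory=list)
    displays: List[Display] = field(default_factory=list)

    def validate(self):
        """Check that every stage and display refers to something defined earlier."""
        known = {camera.name for camera in self.cameras}
        for stage in self.stages:
            missing = [name for name in stage.inputs if name not in known]
            if missing:
                raise ValueError(f"stage {stage.name} has unknown inputs {missing}")
            known.add(stage.name)
        for display in self.displays:
            if display.source not in known:
                raise ValueError(f"display {display.name} has unknown source {display.source}")
        return True

# Three disjoint screens: one camera per display, no mesh stages (placeholder frustums).
three_screens = Pipeline(
    cameras=[Camera(n, (-0.1, 0.1, -0.05, 0.05), (1920, 1080)) for n in ("left", "centre", "right")],
    displays=[Display("out_" + n, n) for n in ("left", "centre", "right")],
)

# A dome with a single projector: four cameras, a fisheye stage, then a warp stage.
dome = Pipeline(
    cameras=[Camera(n, (-0.1, 0.1, -0.1, 0.1), (1024, 1024)) for n in ("l", "r", "t", "b")],
    stages=[MeshStage("fisheye", "fisheye.mesh", ["l", "r", "t", "b"]),
            MeshStage("warp", "mirror_warp.mesh", ["fisheye"])],
    displays=[Display("projector", "warp")],
)
```

Calling `validate()` on either pipeline confirms that each stage only consumes textures produced earlier in the chain, which is the ordering constraint implied by the two layers of render to texture.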

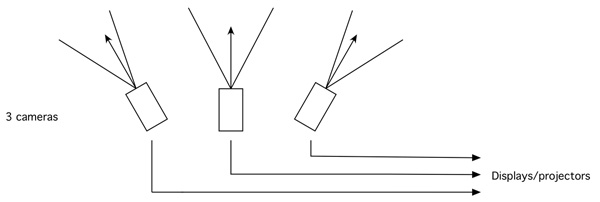

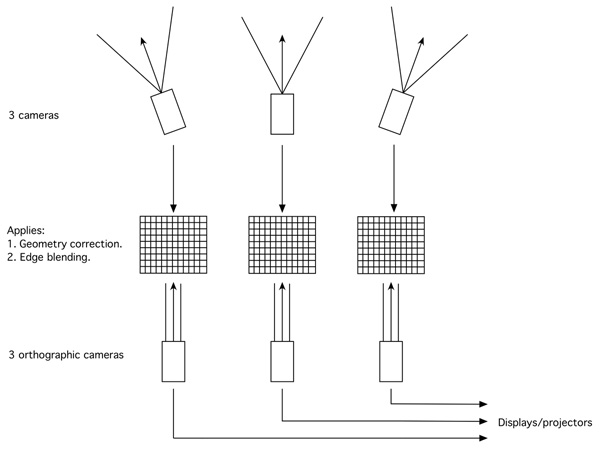

Some specific examples will be given of how the flow indicated in figure 7 may be configured for particular classes of immersive display. The first case in figure 1 has three disjoint screens or projections. Not surprisingly this is the simplest case; it only requires 3 cameras, but each camera potentially needs an offset style frustum.

The examples in figure 2 and figure 3 introduce edge blending and geometry correction; both of these require one level of render to texture, see figure 9. The per colour (r,g,b) edge blending is achieved through multiplicative blending of the vertex colours with the render texture. Geometry correction can be achieved by adjusting either the vertex positions or the texture coordinates at each vertex; the most appropriate depends on the characteristics of the particular application and the correction required. In all cases the end user or the hardware supplier provides this edge blending or warping data, in addition to the camera specifications and the resolution of the textures produced from each camera.
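A mesh vertex that carries both kinds of correction might look like the sketch below. The layout is an assumption made for illustration, not a file format defined in the text: each vertex stores a position on the output display, a texture coordinate into the rendered camera texture, and a per-channel colour that is multiplied with the sampled texel. The linear fade used to fill in the colours is only a placeholder for whatever blend data the hardware supplier provides.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class WarpVertex:
    x: float  # position on the output display, normalised to [0, 1]
    y: float
    u: float  # texture coordinate into the camera's render texture
    v: float
    r: float  # per-channel multiplicative blend factors
    g: float
    b: float

def shade(vertex: WarpVertex, texel: Tuple[float, float, float]) -> Tuple[float, float, float]:
    """Multiply a sampled texel by the vertex colour, as a GPU would when modulating."""
    return (texel[0] * vertex.r, texel[1] * vertex.g, texel[2] * vertex.b)

def make_row(columns: int, overlap: float) -> List[WarpVertex]:
    """One row of vertices for a projector whose right edge overlaps its neighbour.

    overlap is the fraction of the image width shared with the neighbour.
    The fade is linear here purely as a placeholder.
    """
    row = []
    for i in range(columns):
        x = i / (columns - 1)
        start = 1.0 - overlap
        weight = 1.0 if x <= start else (1.0 - x) / overlap
        # No geometry correction in this example, so u simply equals x.
        row.append(WarpVertex(x, 0.0, x, 0.0, weight, weight, weight))
    return row

row = make_row(5, 0.5)
dimmed = shade(row[3], (0.8, 0.6, 0.4))  # a texel inside the overlap region
```

Because the game only ever multiplies texels by the vertex colours and draws the mesh at the stored positions, it does not need to know whether the data encodes edge blending, geometry correction, or both.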

The final example illustrates one option for the iDome that employs both proposed layers of render to texture. While this could be simplified by the first layer of render to texture creating the geometry corrected fisheye directly, the scheme here can be extended to dome projection using multiple projectors by the second layer of orthographic projectors capturing segments of the fisheye image and applying those to a further mesh for the geometry correction and edge blending.

Summary

Despite seemingly clear advantages to players from gaming within a display that engages their peripheral vision, such displays are almost universally unsupported, even though support for the rarely used stereoscopic 3D is not uncommon. Indeed the energy of much of the gaming industry is directed towards platforms with even less immersive capabilities than standard home computers, namely the small low resolution screens of portable devices. The proposal here is that immersive gaming has performance and enjoyment benefits [Goslin, 1996], if only because it presents a virtual 3D world in a way that is closer to the way we experience our real 3D world. The challenge then is how game developers can provide support for the multiplicity of possible immersive gaming platforms, which range from discrete multiple displays surrounding the gamer to hemispherical displays such as the iDome. It can be shown that a relatively straightforward multiple camera, multiple render stage, and "render to texture" pipeline can support a wide range of these immersive displays. The advantage with modern graphics hardware is that we finally have the graphics processing required to implement such pipelines, which in the past were only available on multi-pipe workstation level hardware. The challenge is for developers to build the open ended, non-solution specific support required into their products. The discussion here has described the capabilities required.

Future work

Using the Unity3D game engine it is now possible to convert any particular game for a range of environments and compare player enjoyment and performance. In particular any Unity3D based game can be built for a single display, a discrete tiled display, a continuous cylinder, a stereoscopic wall, and the hemispherical iDome. In each case the display will employ projectors to create approximately the same visual dimensions, namely 3m, and therefore the same effective resolution. This range of gaming environments will allow player metrics to be measured and compared. Of particular interest are physical stresses, player gaming experience, sense of immersion, and performance.

References

[Bailey, 2006] M. Bailey, M. Clothier, N. Gebbie. Realtime Dome Imaging and Interaction: Towards Immersive Design Environments. Proceedings of IDETC/CIE 2006, ASME 2006 International Design Engineering Technical Conferences & Computers and Information in Engineering Conference, September 10-13, 2006.

[Barfield, 1995] W. Barfield, E. Danas. Comments on the Use of Olfactory Displays for Virtual Environments. Presence, Winter 1995, Vol 5 No 1, pp. 109-121.

[Bourke, 2008] P.D. Bourke. Low Cost Projection Environment for Immersive Gaming. JMM (Journal of MultiMedia), Volume 3, Issue 1, May 2008, pp. 41-46.

[Bourke, 2005] P.D. Bourke. Using a spherical mirror for projection into immersive environments. Graphite (ACM Siggraph), Dunedin, Nov/Dec 2005.

[Bourke, 2004] P.D. Bourke. Edge blending using commodity projectors.

[Bourke, 2004b] P.D. Bourke. Image warping for projection into a cylinder.

[Cruz-Neira, 1993] C. Cruz-Neira, D.J. Sandin, T.A. DeFanti. Surround-Screen Projection-Based Virtual Reality: The Design and Implementation of the CAVE. ACM SIGGRAPH 93 Proceedings, Anaheim, CA, pp. 135-142, August 1993.

[Bourke, 2009] P.D. Bourke. iDome: Immersive gaming with the Unity game engine. Accepted for Computer Games & Allied Technology 09.

[DigitalTigers] Digital Tiger monitor products. http://www.digitaltigers.com/

[Elumenati] Elumenati: Immersive Projective Design. http://www.elumenati.com/

[GDC, 2008] GDC08: Enter the Z-Dome. http://www.joystiq.com/2008/02/21/gdc08-enter-the-z-dome/

[Goslin, 1996] M. Goslin, J.F. Morie. Virtopia: Emotional experiences in Virtual Environments. Leonardo, vol. 29, no. 2, pp. 95-100, 1996.

[Hillis, 1999] K. Hillis. Digital Sensations: Space, Identity and Embodiment in Virtual Reality. University of Minnesota Press, Minneapolis, MN, 1999.

[jdome] jDome: Step into a larger world. http://www.jdome.com/

[Kang, 2006] D. Jo, H. Kang, G.A. Lee, W. Son. Xphere: A PC Cluster based Hemispherical Display System. IEEE VR2006 Workshop on Emerging Technologies, March 26, 2006.

[Klein, 2005] D.H. Klein, G.J. Freimuth, S. Monkman, A. Egersdörfer, H. Meier, M. Böse, H. Baumann, Ermert & O.T. Bruhns. Electrorheological Tactile Elements. Mechatronics, Vol 15, No 7, pp. 883-897, Pergamon, September 2005.

[Matrox] Matrox Graphics eXpansion Modules: DualHead2Go and TripleHead2Go.

[Miyashita, 1990] T. Miyashita, T. Uchida. Cause of fatigue and its improvement in stereoscopic displays. Proc. of the SID, Vol. 31, No. 3, pp. 249-254, 1990.

[Yamazaki, 1990] T. Yamazaki, K. Kamijo, S. Fukuzumi. Quantitative evaluation of visual fatigue encountered in viewing stereoscopic 3D displays: Near-point distance and visual evoked potential study. Proc. of the SID, Vol. 31, No. 3, pp. 245-247, 1990.

[Yanagida, 2004] Y. Yanagida, S. Kawato, H. Noma, A. Tomono, N. Tesutani. Projection based olfactory display with nose tracking. Proceedings of IEEE Virtual Reality 2004, 27-31 March 2004, pp. 43-50.