Extracting stereo pairs from 180 VR cameras

Written by Paul Bourke
August 2019

Image example courtesy of Ed Bates

Introduction

In the current climate of interest in virtual reality and head mounted displays, there are a number of cameras on the market designed to capture video for these devices. One category does not attempt to create full 360 degree stereoscopic panoramas, since that is frankly rather difficult to do well, but instead captures a left and right eye fisheye pair. This is generally called VR180, even though the lenses are often wider than 180 degrees. Within a head mounted display one gets a stereoscopic 3D depth effect, including peripheral vision, but without the ability to look all the way around.

While these cameras are perfectly suited to creating content for head mounted displays, the fisheye projections are not suitable for viewing on flat screen stereoscopic projection systems or television displays. However, the captured fisheyes obviously contain the material needed for the narrower field of view of these flat screen stereoscopic systems. All that is required is to extract a perspective projection at the desired field of view.

Example

The remainder of this document presents an example of this and the workflow one might follow. It is based upon the author's fish2persp utility, which can be implemented in a number of ways, including CPU based offline processing, on the GPU as a GLSL shader, or as lookup remap images for ffmpeg or Nuke.

The fish2persp command line utility allows one to extract a perspective projection with any reasonable horizontal field of view (-t option), oriented in any direction within the fisheye field of view. For example, to extract a perspective view looking along the center of each fisheye one might use:

```
fish2persp -w 2100 -h 1200 -s 190 -r 1480 -c 1448 1436 -t 100 cam_left.jpg
fish2persp -w 2100 -h 1200 -s 190 -r 1480 -c 1414 1426 -t 100 cam_right.jpg
```

For the meaning of the other command line options, the reader should refer to the documentation on the fish2persp web page.

Once the perspective views are extracted, the stereo pairs need to be aligned, in much the same way as one would align images from a standard stereoscopic camera. First, any vertical misalignment is corrected; this is typically due to inaccuracies in the mechanical alignment of the camera, lens and sensor. Second, the zero parallax distance needs to be set, that is, the distance that will appear at the screen depth. This is typically chosen to be an object close to the camera, to reduce the number of negative parallax objects (those appearing in front of the screen) that are cut by the display boundary. After selecting a feature to reside at the screen depth, one translates the stereo pairs horizontally so that this feature has zero parallax, that is, so that it is aligned in the two images. After these two procedures the images would typically be cropped to the final desired resolution, in this case 1920x1080. This alignment and cropping is the reason for choosing slightly larger perspective views of 2100x1200 in the command options (-w and -h).
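The alignment and crop step can be sketched as follows. This is a minimal Python illustration, not part of fish2persp: the names `align_crops` and `_split_shift` are hypothetical, only translations are handled (no rotation correction), the vertical offset is assumed to be measured on the same feature chosen for zero parallax, and the shift is split equally between the two eyes starting from a centered crop. With 2100x1200 views and a 1920x1080 output there is a margin of 180 pixels horizontally and 120 vertically to absorb the shift.

```python
def _split_shift(left_pos, right_pos, src_len, out_len):
    """Crop origins along one axis so a feature lands at the same place in both eyes."""
    margin = src_len - out_len          # total room available for shifting
    base = margin // 2                  # origin of a centered crop
    d = right_pos - left_pos            # disparity of the feature along this axis
    left_origin = base - d // 2         # split the shift between the two eyes
    right_origin = left_origin + d
    if not (0 <= left_origin <= margin and 0 <= right_origin <= margin):
        raise ValueError("disparity exceeds the crop margin")
    return left_origin, right_origin


def align_crops(left_feature, right_feature,
                src_size=(2100, 1200), out_size=(1920, 1080)):
    """Return ((x, y) left crop origin, (x, y) right crop origin).

    left_feature and right_feature are the (x, y) pixel positions of the chosen
    zero parallax feature in the extracted left and right perspective views.
    """
    lx, rx = _split_shift(left_feature[0], right_feature[0], src_size[0], out_size[0])
    ly, ry = _split_shift(left_feature[1], right_feature[1], src_size[1], out_size[1])
    return (lx, ly), (rx, ry)


# Hypothetical measurement: feature at (1000, 600) in the left view
# and at (1040, 604) in the right view.
left_origin, right_origin = align_crops((1000, 600), (1040, 604))
print(left_origin, right_origin)  # (70, 58) (110, 62)
```

Each eye is then cropped to 1920x1080 from its origin. In both crops the feature lands at (930, 542), so it has zero parallax and no vertical disparity.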

An example looking down on the bench:

```
fish2persp -w 2100 -h 1200 -s 190 -r 1480 -c 1448 1436 -t 100 -x 30 cam_left.jpg
fish2persp -w 2100 -h 1200 -s 190 -r 1480 -c 1414 1426 -t 100 -x 30 cam_right.jpg
```
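The mapping behind such an extraction can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the fish2persp source: it assumes an equidistant ("linear") fisheye, where distance from the fisheye center is proportional to the angle from the optical axis; it reads -s as the fisheye aperture in degrees, -r as the fisheye radius in pixels and -c as the fisheye center; and it assumes a positive tilt (-x) pitches the view down. The function name `persp_to_fisheye` is hypothetical.

```python
import math


def persp_to_fisheye(i, j, width, height, fov_deg, aperture_deg, radius,
                     cx, cy, tilt_deg=0.0):
    """Map perspective pixel (i, j) to a fisheye position (u, v).

    Assumes an equidistant fisheye. Returns None when the ray falls outside
    the fisheye aperture.
    """
    # Ray through the pixel center on an image plane at z = 1,
    # x to the right and y up; fov_deg is the horizontal field of view.
    half_w = math.tan(math.radians(fov_deg) / 2)
    x = (2 * (i + 0.5) / width - 1) * half_w
    y = (1 - 2 * (j + 0.5) / height) * half_w * height / width
    z = 1.0

    # Tilt about the x axis; positive values pitch the view downwards
    # (sign convention assumed for this sketch).
    a = math.radians(tilt_deg)
    y, z = y * math.cos(a) - z * math.sin(a), y * math.sin(a) + z * math.cos(a)

    # Angle from the fisheye optical axis (+z) and azimuth around it.
    theta = math.atan2(math.hypot(x, y), z)
    phi = math.atan2(y, x)
    half_aperture = math.radians(aperture_deg) / 2
    if theta > half_aperture:
        return None

    # Equidistant model: radius in pixels is proportional to theta.
    r = radius * theta / half_aperture
    # Image rows increase downwards, hence the minus sign for v.
    return cx + r * math.cos(phi), cy - r * math.sin(phi)


# Center pixel of the tilted left-eye view from the commands above.
print(persp_to_fisheye(1050, 600, 2100, 1200, 100, 190, 1480, 1448, 1436, tilt_deg=30))
```

Evaluating this for every output pixel and sampling the fisheye at the returned position (ideally with antialiasing) produces the perspective view; the same per-pixel lookup is what a GLSL shader or a remap image would encode.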

While the example above uses the CPU post processing approach, with a GLSL shader the same extraction could readily be performed in real time, allowing the viewer to navigate interactively within the fisheye bounds.

Notes

ffmpeg remap filters

While the above is suitable for still images, converting movies from a VR180 camera this way is inefficient: the movie first needs to be converted to frames, each frame mapped to perspective, and the results reconstituted into a standard perspective stereoscopic movie. The following is an exercise in creating a perspective stereoscopic movie from a movie recorded with the Insta360 Evo camera. Unlike most VR180 cameras, the Evo in the VR180 configuration automatically converts each eye from fisheye into half of an equirectangular projection, and then places the two halves side-by-side in a single equirectangular frame.
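To illustrate the kind of conversion the Evo performs, the following hypothetical sketch maps a pixel of one eye's half-equirectangular image (longitude and latitude both spanning -90 to 90 degrees, so each half is square) back to a fisheye position. The Evo's actual in-camera processing is not documented here; the sketch assumes an equidistant fisheye whose optical axis is the center of the half, and the name `half_equirect_to_fisheye` and its parameters are illustrative only.

```python
import math


def half_equirect_to_fisheye(u, v, size, aperture_deg, radius, cx, cy):
    """Map pixel (u, v) of a size x size half-equirectangular image to a
    fisheye position, or None if the direction lies outside the aperture.

    Assumes an equidistant fisheye whose optical axis is the center of the half.
    """
    # Longitude and latitude of the pixel center, each spanning -90..90 degrees.
    lon = math.radians(((u + 0.5) / size - 0.5) * 180)
    lat = math.radians((0.5 - (v + 0.5) / size) * 180)

    # Unit direction vector: x right, y up, z along the optical axis.
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = math.cos(lat) * math.cos(lon)

    # Angle from the optical axis; the fisheye radius is proportional to it.
    theta = math.atan2(math.hypot(x, y), z)
    half_aperture = math.radians(aperture_deg) / 2
    if theta > half_aperture:
        return None
    r = radius * theta / half_aperture
    phi = math.atan2(y, x)
    # Image rows increase downwards, hence the minus sign.
    return cx + r * math.cos(phi), cy - r * math.sin(phi)
```

Since no direction in a half-equirectangular is more than 90 degrees from its center, a fisheye wider than 180 degrees covers the whole half, and the function never returns None for such apertures.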

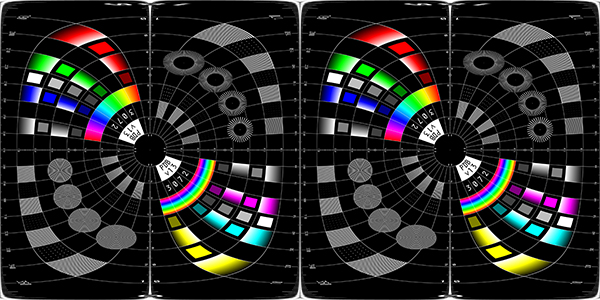

To extract a perspective view from an equirectangular image, the author has the sphere2persp utility. To facilitate the extraction of perspective projections here, two additional capabilities have been added. The first is support for offset perspectives (-ho option), which allows control over the zero parallax distance when screen based viewing is required. This acts the same as the so called "asymmetric perspective" in computer graphics, or shift lenses in photography. The second is the generation of PGM files for the remap filter in ffmpeg (-f option).

```
Usage: ./sphere2persp [options] sphericalimage
Options
   -w n    perspective image width, default = 1920
   -h n    perspective image height, default = 1080
   -t n    FOV of perspective (degrees), default = 100
   -x n    tilt angle (degrees), default: 0
   -y n    roll angle (degrees), default: 0
   -z n    pan angle (degrees), default: 0
   -vo n   vertical offset for offaxis perspective (percentage), default: 0
   -ho n   horizontal offset for offaxis perspective (percentage), default: 0
   -f      create remap filters for ffmpeg, default: off
   -a n    antialiasing level, default = 2
   -n s    output file name, default: derived from input image filename
   -d      enable verbose mode, default: off
```

The procedure then is to convert a single frame, choosing the desired field of view, image dimensions and aspect, pan and tilt angles, along with optimal values of the horizontal offset. The resulting PGM files are used with ffmpeg to extract a perspective view from each half of the equirectangular movie; ffmpeg can also optionally combine the two resulting movies back into a side-by-side stereoscopic movie. For example, the PGM files for the perspective views of each half of the equirectangular might be created as follows.

```
sphere2persp -f -w 1920 -h 1080 -t 110 -ho 5 -x 10 -z -90 testpattern.jpg
sphere2persp -f -w 1920 -h 1080 -t 110 -ho -5 -x 10 -z 90 testpattern.jpg
```

The above will create four PGM files: an x and a y PGM for each of the left and right eyes.
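The mapping that such remap files encode can be sketched as follows. This is a minimal Python illustration, not the sphere2persp source: the function name `persp_to_equirect` is hypothetical, roll (-y) and the vertical offset (-vo) are not handled, -ho is assumed to shift the perspective window by that percentage of the image width (positive to the right), and positive tilt and pan are assumed to pitch the view down and turn it right. The key point is that the offset shifts the image window sideways rather than rotating the camera, which is what makes the frustum asymmetric.

```python
import math


def persp_to_equirect(i, j, width, height, fov_deg, src_w, src_h,
                      pan_deg=0.0, tilt_deg=0.0, ho_percent=0.0):
    """Map perspective pixel (i, j) to a position (u, v) in a 2:1
    equirectangular frame of src_w x src_h pixels.

    Assumptions for this sketch: ho_percent shifts the image window by that
    percentage of its width (positive to the right), positive tilt pitches
    the view down, positive pan turns it right. Roll and -vo are ignored.
    """
    half_w = math.tan(math.radians(fov_deg) / 2)
    # Off-axis: the window is shifted sideways, the camera is not rotated.
    x = (2 * (i + 0.5) / width - 1 + 2 * ho_percent / 100) * half_w
    y = (1 - 2 * (j + 0.5) / height) * half_w * height / width
    z = 1.0

    # Tilt about the horizontal (x) axis.
    a = math.radians(tilt_deg)
    y, z = y * math.cos(a) - z * math.sin(a), y * math.sin(a) + z * math.cos(a)

    # Longitude measured from +z towards +x; a pan about the vertical axis
    # adds directly to it. Wrap into [-180, 180).
    lon = math.degrees(math.atan2(x, z)) + pan_deg
    lon = (lon + 180) % 360 - 180
    lat = math.degrees(math.atan2(y, math.hypot(x, z)))

    # Longitude -180..180 spans the frame width, latitude 90..-90 its height.
    u = (lon + 180) / 360 * src_w
    v = (90 - lat) / 180 * src_h
    return u, v
```

Under the sign assumption used here, opposite offsets for the two eyes (such as 5 and -5) shift the two views towards each other, bringing the zero parallax distance in from infinity to a finite distance.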
The pan angles of -90 and 90 position the default perspective camera on the center of each half of the equirectangular. The movie might then be converted into left and right eye movies as follows.

```
# extract movies
ffmpeg -i sourcemovie.mp4 -i leftx.pgm -i lefty.pgm -lavfi remap left.mp4
ffmpeg -i sourcemovie.mp4 -i rightx.pgm -i righty.pgm -lavfi remap right.mp4
# combine side-by-side
ffmpeg -i left.mp4 -i right.mp4 -filter_complex hstack sbs.mp4
```

Note that the remap filter in ffmpeg uses point sampling, without any antialiasing. One solution is to extract the perspective at 2 or 3 times the desired final resolution, and then scale the perspective movies down as a final pass.
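For readers who want to generate their own maps, the following hypothetical sketch writes the two lookup images that the remap filter takes as its second and third inputs. It assumes the maps are 16-bit binary PGM files (P5 with a maximum value of 65535, each sample stored most significant byte first, as the PGM format specifies) holding, for every output pixel, the integer x or y coordinate of the source pixel to copy. Pixels with no valid source get the coordinate 65535, which remap is expected to treat as outside the source frame and fill rather than copy. The names `pgm16_bytes` and `build_remap_pair` are illustrative; this is not the -f output code of sphere2persp.

```python
import math
import struct


def pgm16_bytes(values, width, height):
    """Encode row-major integer samples (0..65535) as a binary 16-bit PGM."""
    if len(values) != width * height:
        raise ValueError("expected width * height samples")
    header = f"P5\n{width} {height}\n65535\n".encode("ascii")
    # 16-bit PGM samples are big-endian ('>'), one unsigned short ('H') each.
    return header + struct.pack(f">{len(values)}H", *values)


def build_remap_pair(width, height, src_w, src_h, mapping):
    """Return (xmap, ymap) PGM bytes for an output of width x height pixels.

    mapping(i, j) returns the continuous source position (x, y) for output
    pixel (i, j), or None if there is none. Source pixel k is taken to cover
    [k, k + 1), so floor() picks the pixel the position falls in.
    """
    xs, ys = [], []
    for j in range(height):
        for i in range(width):
            src = mapping(i, j)
            x = y = 65535  # default: deliberately outside the source frame
            if src is not None:
                sx, sy = math.floor(src[0]), math.floor(src[1])
                if 0 <= sx < src_w and 0 <= sy < src_h:
                    x, y = sx, sy
            xs.append(x)
            ys.append(y)
    return pgm16_bytes(xs, width, height), pgm16_bytes(ys, width, height)
```

Saving is then a matter of `open("leftx.pgm", "wb").write(xmap)` and likewise for the y map. Following the antialiasing advice above, `width` and `height` would be 2 or 3 times the final resolution, with the movie scaled down afterwards. Building the lists in pure Python is slow for large frames; it is written this way for clarity.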